Difference between revisions of "Tools: Team Three"

(Created page with "'''Team three: visualisation of historical data''' <u>Team summary</u> We will explore how visualisation techniques can be used by historians for multiple purposes - to impr...") |

(→Useful Links) |

||

| (23 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

'''Team three: visualisation of historical data''' | '''Team three: visualisation of historical data''' | ||

| − | + | __TOC__ | |

| + | |||

| + | ==Team summary== | ||

We will explore how visualisation techniques can be used by historians for multiple purposes - to improve the discoverability of data, to highlight and analyse linkages in data, and to aid the comprehension of data. | We will explore how visualisation techniques can be used by historians for multiple purposes - to improve the discoverability of data, to highlight and analyse linkages in data, and to aid the comprehension of data. | ||

| Line 11: | Line 13: | ||

Team members will have an opportunity to work with, and improve upon, a MarineLives dataset for C17th ship sailing times between ports and dwell time in ports | Team members will have an opportunity to work with, and improve upon, a MarineLives dataset for C17th ship sailing times between ports and dwell time in ports | ||

| + | ---- | ||

| + | |||

| + | ===Stanford Named Entity Tagger=== | ||

| + | |||

| + | [[File:Stanford NETagger HCA1368f35r 26062016.PNG|600px|thumb|left|Stanford Named Entity Tagger - Extract from [[HCA 13/68 f.35r Annotate|HCA 13/68 f.35r]]]] | ||

| + | |||

| + | For an assessment of the accuracy of the Standford Named Entity Tagger and Standford Named Entity Recogniser using English High Court of Admiralty data see [https://www.academia.edu/6551336/Dominique_Ritze_Caecilia_Zirn_Colin_Greenstreet_Kai_Eckert_Simone_Paolo_Ponzetto_Named_Entities_in_Court_The_Marine_Lives_Corpus_May_2014_ Dominique Ritze et al., Named Entities in Court: The MarineLives Corpus (May, 2014)] | ||

| + | ---- | ||

| + | |||

| + | ==High Court of Admiralty dataset== | ||

| + | |||

| + | [[File:Timelines 26062016.PNG|600px|thumb|left|High Court of Admiralty 1650s travel time dataset]] | ||

| + | |||

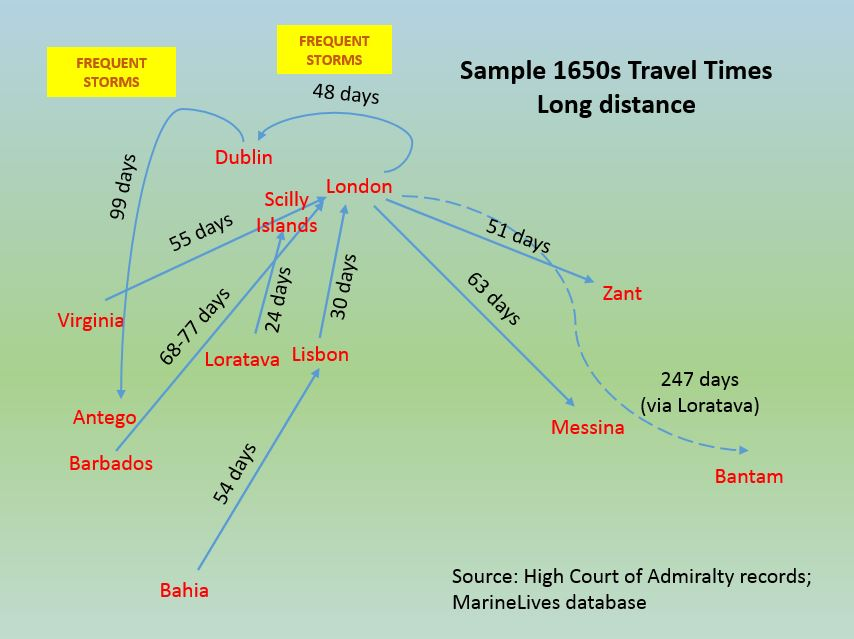

| + | [[File:Long Distance Traveltimes 26062-16.PNG|600px|thumb|left|Long distance travel time - simple visualisation]] | ||

| + | |||

| + | [[File:Short Distance Travel Times 26062016.PNG|600px|thumb|left|Short distance travel time - simple visualisation]] | ||

| + | |||

| + | [ADD DATA] | ||

| + | |||

| + | ---- | ||

| + | ==Visualisation tools== | ||

| + | |||

| + | [ADD DATA] | ||

| + | |||

| + | ---- | ||

| + | ==Names Entity Recognisers== | ||

| + | |||

| + | [ADD DATA | ||

| + | ---- | ||

| + | ==Useful Links== | ||

| + | |||

| + | [https://en.wikipedia.org/wiki/Natural_language_processing Natural Language Processing Wikipedia article] | ||

| + | |||

| + | [https://www.academia.edu/6551336/Dominique_Ritze_Caecilia_Zirn_Colin_Greenstreet_Kai_Eckert_Simone_Paolo_Ponzetto_Named_Entities_in_Court_The_Marine_Lives_Corpus_May_2014_ Dominique Ritze et al., Named Entities in Court: The MarineLives Corpus (May, 2014)] | ||

| + | |||

| + | [http://marinelives-theshippingnews.org/blog/2014/05/22/how-long-did-it-take/ Colin Greenstreet, 'How long did it take?', The Shipping News blog article, Mat 22, 2014] | ||

| + | |||

| + | [http://abbymullen.org/named-entity-extraction-productive-failure/ Abby Mullen, Named Entity Extraction: Productive Failure? April 18th, 2015. Blog article] | ||

| + | - Discusses automatic extraction of place names through named entity recognition, using the software package "r" | ||

| + | - Extracts place names from the 6-volume document collection 'Naval Documents Related to the United States Wars with the Barbary Powers' | ||

| + | - Identifies problem of confusion between place names and US naval ships named after places, e.g. the USS Chesapeake | ||

| + | |||

| + | ---- | ||

| + | ===Stanford Natural Language Processing Links=== | ||

| + | |||

| + | [http://www-nlp.stanford.edu/software/jenny-ner-2007.ppt Jenny Rose Finkel, Stanford University, March 9, 2007] | ||

| + | |||

| + | [https://sergey-tihon.github.io/Stanford.NLP.NET/StanfordCoreNLP.html Stanford CoreNLP for .NET] | ||

| + | |||

| + | - Stanford CoreNLP provides a set of natural language analysis tools which can take raw English language text input and give the base forms of words, their parts of speech, whether they are names of companies, people, etc., normalize dates, times, and numeric quantities, and mark up the structure of sentences in terms of phrases and word dependencies, and indicate which noun phrases refer to the same entities. Stanford CoreNLP is an integrated framework, which makes it very easy to apply a bunch of language analysis tools to a piece of text. Starting from plain text, you can run all the tools on it with just two lines of code. Its analyses provides the foundational building blocks for higher-level and domain-specific text understanding applications. | ||

| + | |||

| + | Stanford CoreNLP integrates all Stanford NLP tools, including the part-of-speech (POS) tagger, the named entity recognizer (NER), the parser, the coreference resolution system, and the sentiment analysis tools, and provides model files for analysis of English. The goal of this project is to enable people to quickly and painlessly get complete linguistic annotations of natural language texts. It is designed to be highly flexible and extensible. With a single option, you can choose which tools should be enabled and which should be disabled. | ||

| + | |||

| + | [http://nlp.stanford.edu:8080/parser/ Stanford Parser for ,NET (A statistical parser)] | ||

| + | |||

| + | - A natural language parser is a program that works out the grammatical structure of sentences, for instance, which groups of words go together (as "phrases") and which words are the subject or object of a verb. Probabilistic parsers use knowledge of language gained from hand-parsed sentences to try to produce the most likely analysis of new sentences. These statistical parsers still make some mistakes, but commonly work rather well. Their development was one of the biggest breakthroughs in natural language processing in the 1990s. You can try out the Stanford parser online. | ||

| + | |||

| + | [http://nlp.stanford.edu/software/CRF-NER.shtml Stanford Natural Language Processing Group: Software > Stanford Named Entity Recognizer (NER)] | ||

| + | |||

| + | - "Stanford NER is a Java implementation of a Named Entity Recognizer. Named Entity Recognition (NER) labels sequences of words in a text which are the names of things, such as person and company names, or gene and protein names. It comes with well-engineered feature extractors for Named Entity Recognition, and many options for defining feature extractors. Included with the download are good named entity recognizers for English, particularly for the 3 classes (PERSON, ORGANIZATION, LOCATION). | ||

| + | |||

| + | Stanford NER is also known as CRFClassifier. The software provides a general implementation of (arbitrary order) linear chain Conditional Random Field (CRF) sequence models. That is, by training your own models on labeled data, you can actually use this code to build sequence models for NER or any other task. (CRF models were pioneered by Lafferty, McCallum, and Pereira (2001); see Sutton and McCallum (2006) or Sutton and McCallum (2010) for more comprehensible introductions.) | ||

| + | |||

| + | The original CRF code is by Jenny Finkel. The feature extractors are by Dan Klein, Christopher Manning, and Jenny Finkel. Much of the documentation and usability is due to Anna Rafferty. More recent code development has been done by various Stanford NLP Group members. | ||

| + | |||

| + | Stanford NER is available for download, licensed under the GNU General Public License (v2 or later). Source is included. The package includes components for command-line invocation (look at the shell scripts and batch files included in the download), running as a server (look at NERServer in the sources jar file), and a Java API (look at the simple examples in the NERDemo.java file included in the download, and then at the javadocs). Stanford NER code is dual licensed (in a similar manner to MySQL, etc.). Open source licensing is under the full GPL, which allows many free uses."<ref>[http://nlp.stanford.edu/software/CRF-NER.shtml Stanford Natural Language Processing Group: Software > Stanford Named Entity Recognizer (NER)], viewed 26/06/2016</ref> | ||

| + | |||

| + | [http://nlp.stanford.edu:8080/ner/ Online Stanford Named Entity Tagger] | ||

| + | |||

| + | [https://sergey-tihon.github.io/Stanford.NLP.NET/StanfordSUTime.html Stanford Temporal Tagger: SUTime for .NET] | ||

| + | |||

| + | - SUTime is a library for recognizing and normalizing time expressions. SUTime is available as part of the Stanford CoreNLP pipeline and can be used to annotate documents with temporal information. It is a deterministic rule-based system designed for extensibility. | ||

| + | |||

| + | SUTime was developed using TokensRegex, a generic framework for defining patterns over text and mapping to semantic objects. An included set of PowerPoint slides and the javadoc for SUTime provide an overview of this package. | ||

| + | |||

| + | SUTime annotations are provided automatically with the Stanford CoreNLP pipeline by including the ner annotator. When a time expression is identified, the NamedEntityTagAnnotation is set with one of four temporal types (DATE, TIME, DURATION, and SET) and the NormalizedNamedEntityTagAnnotation is set to the value of the normalized temporal expression. The temporal type and value correspond to the TIMEX3 standard for type and value. (Note the slightly weird and non-specific entity name SET, which refers to a set of times, such as a recurring event.) | ||

Latest revision as of 06:56, July 6, 2016

Team three: visualisation of historical data

Team summary

We will explore how visualisation techniques can be used by historians for multiple purposes - to improve the discoverability of data, to highlight and analyse linkages in data, and to aid the comprehension of data.

We will undertake an analysis of our own needs as historians and will explore how software designers have approached meeting those needs.

An explicit goal of team three is to understand the visualisation potential of the MarineLives full text corpus and to explore approaches to mining the data for visualisation applications.

We would like to explore the use of an off-the-shelf Named Entity Recogniser to detect places, ships and dates, and to visualise the results in multiple ways and for multiple analytical purposes. We would like to compare this automated approach to the generation of tagged data with the hand extraction of geographical and other tagged data. We will build on earlier work done in collaboration with the Department of Informatics at the University of Mannheim.

Team members will have an opportunity to work with, and improve upon, a MarineLives dataset for C17th ship sailing times between ports and dwell time in ports.
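
As a rough illustration of the two quantities in that dataset, the sketch below computes sailing time between ports and dwell time in port from a list of voyage legs. The record layout, ship name, ports and dates are all invented for the example and are not taken from the MarineLives data.

```python
from datetime import date

# Hypothetical voyage legs; field names, ports and dates are invented
# for illustration and do not come from the MarineLives dataset.
legs = [
    {"ship": "Example", "port_from": "London", "departed": date(1655, 3, 1),
     "port_to": "Lisbon", "arrived": date(1655, 3, 21)},
    {"ship": "Example", "port_from": "Lisbon", "departed": date(1655, 4, 10),
     "port_to": "London", "arrived": date(1655, 5, 2)},
]


def sailing_days(leg):
    """Days at sea between leaving one port and arriving at the next."""
    return (leg["arrived"] - leg["departed"]).days


def dwell_days(previous_leg, next_leg):
    """Days spent in port between arriving and departing again."""
    return (next_leg["departed"] - previous_leg["arrived"]).days


for leg in legs:
    print(leg["port_from"], "->", leg["port_to"], sailing_days(leg), "days")
print("Dwell in", legs[0]["port_to"], dwell_days(legs[0], legs[1]), "days")
```

Keeping each leg as a separate record means the same list can feed both a timeline view and a per-route summary of travel times.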

Stanford Named Entity Tagger

For an assessment of the accuracy of the Stanford Named Entity Tagger and Stanford Named Entity Recogniser using English High Court of Admiralty data, see Dominique Ritze et al., Named Entities in Court: The MarineLives Corpus (May, 2014).

High Court of Admiralty dataset

[ADD DATA]

Visualisation tools

[ADD DATA]

Named Entity Recognisers

[ADD DATA]

Useful Links

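Natural Language Processing Wikipedia article (https://en.wikipedia.org/wiki/Natural_language_processing)

Dominique Ritze et al., Named Entities in Court: The MarineLives Corpus (May, 2014) (https://www.academia.edu/6551336/Dominique_Ritze_Caecilia_Zirn_Colin_Greenstreet_Kai_Eckert_Simone_Paolo_Ponzetto_Named_Entities_in_Court_The_Marine_Lives_Corpus_May_2014_)

Colin Greenstreet, 'How long did it take?', The Shipping News blog article, May 22, 2014 (http://marinelives-theshippingnews.org/blog/2014/05/22/how-long-did-it-take/)

Abby Mullen, Named Entity Extraction: Productive Failure? April 18th, 2015. Blog article (http://abbymullen.org/named-entity-extraction-productive-failure/)

- Discusses automatic extraction of place names through named entity recognition, using the software package R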

- Extracts place names from the 6-volume document collection 'Naval Documents Related to the United States Wars with the Barbary Powers'

- Identifies problem of confusion between place names and US naval ships named after places, e.g. the USS Chesapeake
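
One way to reduce the ship-versus-place confusion described above is a post-processing pass over the recogniser's output. The sketch below is a minimal heuristic, not Mullen's method: it relabels a LOCATION as a ship when the text immediately before it ends in a vessel cue word. The cue list, the SHIP label and the sample sentence are assumptions made for the example.

```python
import re

# Hypothetical cue words suggesting that the following name is a vessel.
SHIP_CUES = re.compile(r"\b(?:USS|frigate|ship|schooner|brig)\s+$", re.IGNORECASE)


def reclassify(text, entities):
    """Relabel LOCATION spans that directly follow a ship cue word.

    entities is a list of (start, end, label) character spans, as might
    be produced by any named entity recogniser.
    """
    result = []
    for start, end, label in entities:
        if label == "LOCATION" and SHIP_CUES.search(text[:start]):
            label = "SHIP"  # assumed extra label, not a Stanford NER class
        result.append((text[start:end], label))
    return result


text = "The USS Chesapeake sailed from Chesapeake Bay."
first = text.index("Chesapeake")
second = text.index("Chesapeake Bay")
entities = [
    (first, first + len("Chesapeake"), "LOCATION"),
    (second, second + len("Chesapeake Bay"), "LOCATION"),
]
print(reclassify(text, entities))
```

A cue-word rule like this will miss ships mentioned without a prefix, so it is best treated as a first filter before hand checking.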

Stanford Natural Language Processing Links

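Jenny Rose Finkel, Stanford University, March 9, 2007 (http://www-nlp.stanford.edu/software/jenny-ner-2007.ppt)

Stanford CoreNLP for .NET (https://sergey-tihon.github.io/Stanford.NLP.NET/StanfordCoreNLP.html)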

- Stanford CoreNLP provides a set of natural language analysis tools which can take raw English language text input and give the base forms of words, their parts of speech, whether they are names of companies, people, etc., normalize dates, times, and numeric quantities, and mark up the structure of sentences in terms of phrases and word dependencies, and indicate which noun phrases refer to the same entities. Stanford CoreNLP is an integrated framework, which makes it very easy to apply a bunch of language analysis tools to a piece of text. Starting from plain text, you can run all the tools on it with just two lines of code. Its analyses provide the foundational building blocks for higher-level and domain-specific text understanding applications.

Stanford CoreNLP integrates all Stanford NLP tools, including the part-of-speech (POS) tagger, the named entity recognizer (NER), the parser, the coreference resolution system, and the sentiment analysis tools, and provides model files for analysis of English. The goal of this project is to enable people to quickly and painlessly get complete linguistic annotations of natural language texts. It is designed to be highly flexible and extensible. With a single option, you can choose which tools should be enabled and which should be disabled.

Stanford Parser for .NET (A statistical parser) (http://nlp.stanford.edu:8080/parser/)

- A natural language parser is a program that works out the grammatical structure of sentences, for instance, which groups of words go together (as "phrases") and which words are the subject or object of a verb. Probabilistic parsers use knowledge of language gained from hand-parsed sentences to try to produce the most likely analysis of new sentences. These statistical parsers still make some mistakes, but commonly work rather well. Their development was one of the biggest breakthroughs in natural language processing in the 1990s. You can try out the Stanford parser online.

Stanford Natural Language Processing Group: Software > Stanford Named Entity Recognizer (NER) (http://nlp.stanford.edu/software/CRF-NER.shtml)

- "Stanford NER is a Java implementation of a Named Entity Recognizer. Named Entity Recognition (NER) labels sequences of words in a text which are the names of things, such as person and company names, or gene and protein names. It comes with well-engineered feature extractors for Named Entity Recognition, and many options for defining feature extractors. Included with the download are good named entity recognizers for English, particularly for the 3 classes (PERSON, ORGANIZATION, LOCATION).

Stanford NER is also known as CRFClassifier. The software provides a general implementation of (arbitrary order) linear chain Conditional Random Field (CRF) sequence models. That is, by training your own models on labeled data, you can actually use this code to build sequence models for NER or any other task. (CRF models were pioneered by Lafferty, McCallum, and Pereira (2001); see Sutton and McCallum (2006) or Sutton and McCallum (2010) for more comprehensible introductions.)

The original CRF code is by Jenny Finkel. The feature extractors are by Dan Klein, Christopher Manning, and Jenny Finkel. Much of the documentation and usability is due to Anna Rafferty. More recent code development has been done by various Stanford NLP Group members.

Stanford NER is available for download, licensed under the GNU General Public License (v2 or later). Source is included. The package includes components for command-line invocation (look at the shell scripts and batch files included in the download), running as a server (look at NERServer in the sources jar file), and a Java API (look at the simple examples in the NERDemo.java file included in the download, and then at the javadocs). Stanford NER code is dual licensed (in a similar manner to MySQL, etc.). Open source licensing is under the full GPL, which allows many free uses." (Stanford Natural Language Processing Group: Software > Stanford Named Entity Recognizer (NER), viewed 26/06/2016)
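
Once the tagger has been run from the command line, its output still has to be turned into data that can be visualised. The sketch below assumes the text was tagged with the inline XML output format, in which each entity is wrapped in a tag named after its class, and collects the names by class. The sample sentence is hand-written for the example and is not real tagger output.

```python
import re
from collections import defaultdict

# Matches the three classes named in the description above.
TAG = re.compile(r"<(PERSON|ORGANIZATION|LOCATION)>(.*?)</\1>")


def entities_by_class(tagged):
    """Group tagged names by entity class, keeping document order."""
    found = defaultdict(list)
    for label, name in TAG.findall(tagged):
        found[label].append(name)
    return dict(found)


# Hand-written sample in the assumed inline XML style.
sample = ("<PERSON>John Smith</PERSON> sailed from "
          "<LOCATION>London</LOCATION> to <LOCATION>Lisbon</LOCATION>.")
print(entities_by_class(sample))
```

The resulting dictionary can be compared directly with hand-extracted lists of people and places to estimate how much the automated approach misses.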

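Online Stanford Named Entity Tagger (http://nlp.stanford.edu:8080/ner/)

Stanford Temporal Tagger: SUTime for .NET (https://sergey-tihon.github.io/Stanford.NLP.NET/StanfordSUTime.html)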

- SUTime is a library for recognizing and normalizing time expressions. SUTime is available as part of the Stanford CoreNLP pipeline and can be used to annotate documents with temporal information. It is a deterministic rule-based system designed for extensibility.

SUTime was developed using TokensRegex, a generic framework for defining patterns over text and mapping to semantic objects. An included set of PowerPoint slides and the javadoc for SUTime provide an overview of this package.

SUTime annotations are provided automatically with the Stanford CoreNLP pipeline by including the ner annotator. When a time expression is identified, the NamedEntityTagAnnotation is set with one of four temporal types (DATE, TIME, DURATION, and SET) and the NormalizedNamedEntityTagAnnotation is set to the value of the normalized temporal expression. The temporal type and value correspond to the TIMEX3 standard for type and value. (Note the slightly weird and non-specific entity name SET, which refers to a set of times, such as a recurring event.)
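
To show how the temporal type and normalized value described above might be consumed downstream, the sketch below reads TIMEX3-style tags from a small XML fragment and lists each expression with its type and value. The fragment, including the DOC wrapper element, is hand-written for the example and is not actual SUTime output.

```python
import xml.etree.ElementTree as ET

# Hand-written fragment in TIMEX3 style; the DOC wrapper is an assumption
# added so that the fragment is well-formed XML.
sample = (
    '<DOC>The ship left on '
    '<TIMEX3 tid="t1" type="DATE" value="1655-03-01">1 March 1655</TIMEX3>'
    ' and was at sea for '
    '<TIMEX3 tid="t2" type="DURATION" value="P20D">twenty days</TIMEX3>.</DOC>'
)


def timex_annotations(xml_text):
    """Return (type, normalized value, original text) for each TIMEX3 tag."""
    root = ET.fromstring(xml_text)
    return [(t.get("type"), t.get("value"), t.text) for t in root.iter("TIMEX3")]


for kind, value, phrase in timex_annotations(sample):
    print(kind, value, repr(phrase))
```

Because the normalized values follow a standard form, DATE and DURATION entries like these could be fed into the sailing-time and dwell-time calculations without parsing the original wording.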